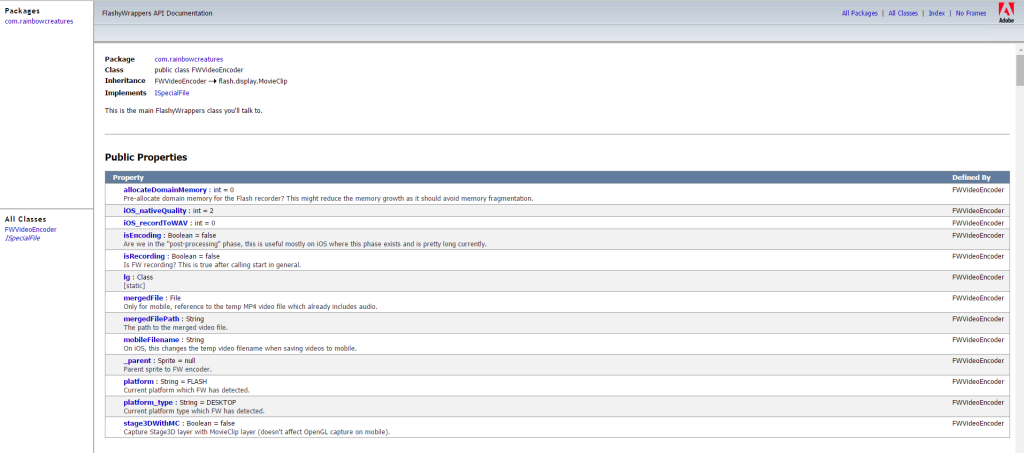

It’s the complete API documentation, both offline and online – coming soon!

Some of the other changes coming up:

– Capturing a rectangular area of the target DisplayObject on mobile in OpenGL mode (to work around the inability to capture DisplayObjects in this mode).

– An API that gives you better hints to avoid nonsensical or dangerous parameters when initializing the encoder.

– Video replay classes for desktop & mobile.

– New video recording APIs for mobile that let you leverage the latest native video recording on iOS / Android, while still supporting the low-level video recording APIs.

– Better audio support through FWSoundMixer & addAudioSoundtrack.

– Improved Android support.

And more…

Yes, yes, yes, yes, yes, yes and yes 😀

In the current version of FW, is there any way to play back the merged MP4 file in a mobile app?

Hi Jakob, there is a “standard” way to play any MP4 file in an AIR app, usually done using NetStream and then either Video, StageVideo or VideoTexture. I recommend searching for that. FW doesn’t currently have a class to help you with this (a sort of one-liner magic command), though one is planned.

The docs say: “On mobile, this means capturing everything including Stage3D / StageVideo or similar layers.”

But when I capture in fullscreen mode, I only get a black rectangle where the StageVideo should have been. Have I missed something?

Sorry, that is a mistake in the docs. StageVideo is not captured because it is rendered on a different layer than the DisplayObject list / Stage3D.

However, if you want to capture playing video (and keep it hardware accelerated), the best and recommended way is to use Stage3D with VideoTexture or, as a fallback, a Video object. Both of these will be captured by FW.